This site is now archived. Please visit the eduPERT Wiki for latest information on eduPERT

eduPERT Endpoint

Performance and Virtual Machines: A Real Case

If you already know how emulation, paravirtualization and other virtualization solutions work and are just looking for a reference to improve VM performance, check this page: How to Maximize virtio-net performance with vhost-net

What is the matter?

“Virtualization” has become a very common word today, and not only in clouds.

We have virtual machines on our laptops, and many (maybe all) IT departments in universities provide virtual machines instead of real servers.

There are many reasons why the virtualization concept has spread so widely.

Probably the main one is that it gives us the illusion of having many more resources.

Another one is that it breaks the limits of the hardware (if we need a new server, we install a virtual machine and skip all the pain of buying a new physical machine - IaaS) and of the software (if we need a new application that runs on a specific OS, we just install a VM with that OS and that application running on it - PaaS). There are many others, like testing a new application or a new OS: if something goes wrong, we just erase the VM. And because they are easy to relocate, virtual machines can be used in disaster recovery scenarios.

There is a huge discussion going on around the duo “Performance and Virtualization” and on whether it is possible to have good performance in a virtualized environment.

When performance in a virtualized environment is a strict requirement, we need to monitor the machines in order to guarantee that the VM is behaving as expected.

Proactive Monitoring

- CPU Utilization

- Memory Utilization

- I/O latency

- Network

But how much can we trust what we see? The numbers reported by monitors inside the VM can be influenced by the behavior and the choices of the hypervisor. And how do we handle what we can’t see? The path between the mother host and the VM can’t be monitored.

In this scenario, determining where an issue lies may not be trivial.
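One concrete example of the hypervisor showing up in the guest's own numbers is CPU "steal" time, which Linux reports in the aggregate `cpu` line of `/proc/stat`. The following is a minimal sketch, assuming a Linux guest; it was not part of the original investigation, and the function names are ours. It computes the share of CPU time the hypervisor took away between two samples.

```python
# Column order of the aggregate "cpu" line in /proc/stat (see proc(5)).
CPU_FIELDS = ["user", "nice", "system", "idle", "iowait",
              "irq", "softirq", "steal", "guest", "guest_nice"]


def parse_cpu_line(line):
    """Parse the aggregate 'cpu' line of /proc/stat into named tick counters."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in fields[1:]]
    # Older kernels report fewer columns; treat the missing ones as zero.
    values += [0] * (len(CPU_FIELDS) - len(values))
    return dict(zip(CPU_FIELDS, values))


def steal_percent(before, after):
    """Percentage of CPU time stolen by the hypervisor between two samples."""
    # guest and guest_nice are already counted inside user and nice.
    keys = ["user", "nice", "system", "idle", "iowait",
            "irq", "softirq", "steal"]
    total = sum(after[k] - before[k] for k in keys)
    if total <= 0:
        return 0.0
    return 100.0 * (after["steal"] - before["steal"]) / total
```

To use it, read the first line of `/proc/stat` twice, a second or so apart, parse both with `parse_cpu_line` and pass the results to `steal_percent`. A value that stays high suggests the VM is competing for physical CPUs, which no monitor inside the guest can fix.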

Real case: SWITCH DNS Server

The most important performance indexes for a DNS Server are:

a) The number of queries per second (qps) that the server is able to answer without “dropping” any.

b) The time needed to answer a request.
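To make the two indexes concrete, here is a small sketch of how they could be computed from the raw output of a load test. It is only an illustration: the function name, its parameters and the numbers in the usage note are made up and do not come from the SWITCH measurements.

```python
from statistics import mean


def summarize_run(sent, latencies_s, duration_s):
    """Summarize one load-test run.

    sent        -- number of queries sent to the server
    latencies_s -- answer time in seconds for each query that got a reply
    duration_s  -- wall-clock duration of the run in seconds
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    answered = len(latencies_s)
    return {
        # Index a): answered queries per second.
        "qps": answered / duration_s,
        # Fraction of queries that never got an answer ("dropped").
        "loss": (sent - answered) / sent if sent else 0.0,
        # Index b): mean time to answer, in milliseconds.
        "mean_latency_ms": 1000 * mean(latencies_s) if latencies_s else None,
    }
```

For example, a hypothetical 10 second run with 1000 queries sent and 900 answered in 2 ms each gives 90 qps, 10% loss and a mean answer time of 2 ms.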

In general, authoritative DNS servers are virtualized, and so is the one at SWITCH.

One of the people responsible for it noticed at some point a very low qps. Too low. In order to understand what was happening, he decided to set up a test environment.

First of all, he installed a VM with the same characteristics as the real one. He then used a sample of the traffic seen on the real connection (captured with queryperf). He was able to reproduce the same bad results.

Trying to improve the performance, he tested the VM first with one CPU and then with two, and there was no difference at all!

Then he repeated the same test on a six-year-old server and obtained a qps at least twice as good as the one on the VM!

At this point he contacted PERT, asking whether we could help him improve this result.

From his report it was clear that the bottleneck was located somewhere between the hardware interface of the mother host and BIND (the DNS software) in the virtual machine, but we couldn’t say anything more than that. The only thing we could do was make assumptions about where the problem could be.

First Hypothesis

The mother host on which the VM was running had two dual-core CPUs and 20 GB of RAM. Maybe that was not enough.

Suggestion:

Migrate the VM to a machine with more cores.

Results:

Not as we hoped…

Running the same test on a machine with 24 logical cores showed almost no improvement…

To make the second suggestion, it was important to know how the hypervisor is structured.

Client Hypervisor

Virtualization techniques can most easily be broken down into three groups:

- Emulation

- Paravirtualization

- Hardware pass-through

Emulation

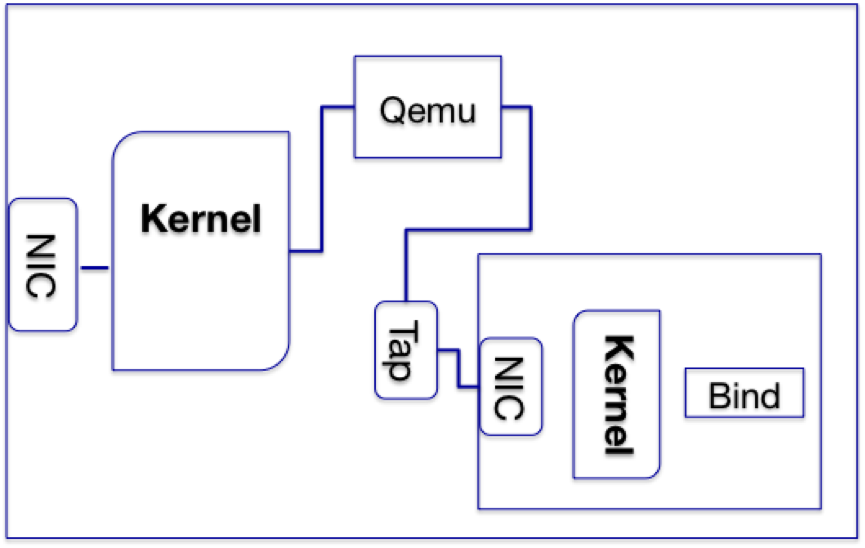

Emulation is probably the virtualization technique that most of us think about when we think about hardware virtualization. As its name implies, emulation is where the hypervisor emulates a certain piece of hardware that it presents to the guest VM, regardless of what the actual physical hardware is. When emulation is used, you get the classic benefit of “VM portability,” but the worst possible performance. This makes sense if you think about it, because the hypervisor needs to receive the instructions for the fake hardware it’s emulating and then translate those to whatever the real hardware needs.

On top of that, involving QEMU in the data path is very expensive because of the context switches to user space and the multiple system calls it requires.

Paravirtualization

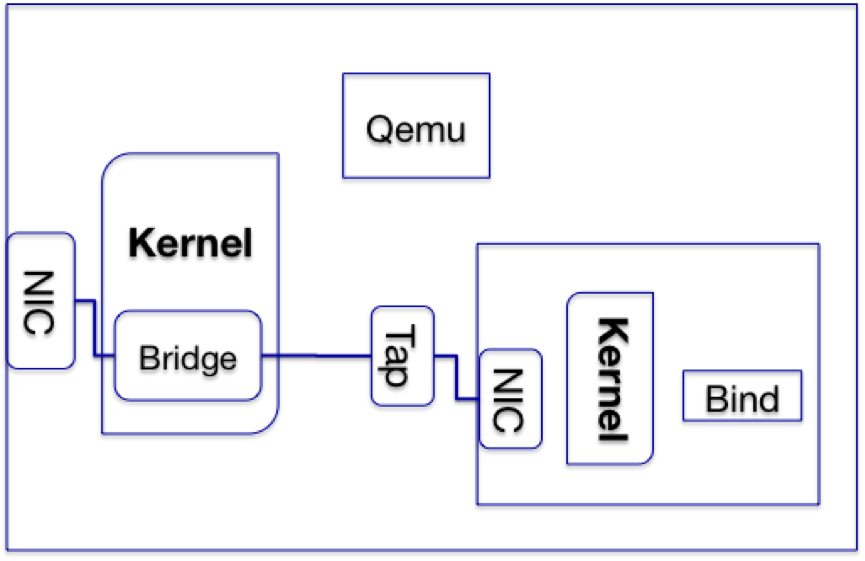

Paravirtualization is a technique where the hypervisor exposes a modified version of the physical hardware interface to the guest VM. The idea is that since emulation “wastes” a lot of computational time doing those translations, a paravirtualization setup will let the guest VM have special access to certain aspects of the physical hardware.

“Bridge” in this case means a bridge in the standard networking (ISO) sense. It is not something specific to QEMU. The bridge functionality is actually provided by the Linux kernel, not by QEMU. Some docs on the net may lead you to believe that bridging is a QEMU feature; QEMU does have this feature, but it relies on the Linux feature instead of providing the functionality itself.

In theory, paravirtualization is really great. You get good performance and some portability. The downside is that it’s a new way for the guest to access the physical hardware through the hypervisor, so you need hardware, a hypervisor, and a guest OS that are all in agreement on what can be paravirtualized.
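Since the guest OS has to take part in paravirtualization, a quick sanity check is to look at which kernel driver the guest's network interface is bound to. The sketch below assumes a Linux guest with sysfs mounted at `/sys`; the helper names are ours, and `eth0` in the usage note is only an example interface name.

```python
import os


def nic_driver(iface, sysfs_root="/sys"):
    """Return the name of the kernel driver bound to a network interface.

    Returns None when the interface has no backing device
    (for example the loopback interface).
    """
    link = os.path.join(sysfs_root, "class", "net", iface, "device", "driver")
    if not os.path.exists(link):
        return None
    # The 'driver' entry is a symlink to the driver's sysfs directory.
    return os.path.basename(os.path.realpath(link))


def uses_virtio_net(iface, sysfs_root="/sys"):
    """True if the interface is driven by the paravirtual virtio_net driver."""
    return nic_driver(iface, sysfs_root) == "virtio_net"
```

Inside the guest, `uses_virtio_net("eth0")` returning `False` would suggest that the interface is still an emulated NIC (for instance one driven by `e1000` or `8139cp`) rather than a paravirtual one.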

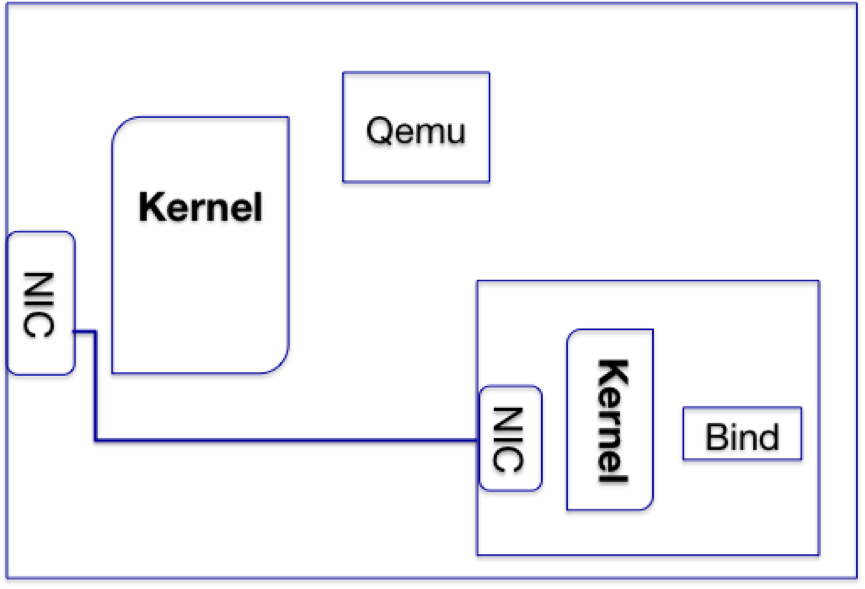

Hardware pass-through

Hardware pass-through means that the guest VM has direct access to the physical hardware. The hypervisor literally lets it “pass through” to the hardware. Hardware pass-through is the best possible performance for the guest VM since it’s essentially the same speed as if there was no hypervisor at all, but you lose VM portability across hardware of different types.

Second Hypothesis

Handling the network traffic in QEMU user space costs too much…

Suggestion:

Paravirtualization.

Use the virtio-net paravirtual drivers and the Linux vhost-net driver.

Results:

Excellent performance!!

Important:

Both the host and the VM were running Debian stable (squeeze) with a kernel from Backports.

The backported kernel was essential because it supports the paravirtualization. The VM was a KVM guest, and the solution reported here is tailored to this virtualization infrastructure.
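For readers who start QEMU/KVM by hand, the second suggestion roughly translates into a tap-backed `-netdev` with `vhost=on` paired with a `virtio-net-pci` device. The sketch below only builds that argument list; it is not the command line used in the SWITCH setup, which the report does not give. The tap name `tap0` and the id `net0` are placeholders, and the exact options accepted depend on the QEMU version.

```python
import os


def vhost_net_available(dev_path="/dev/vhost-net"):
    """True if the host exposes the vhost-net character device."""
    return os.path.exists(dev_path)


def virtio_net_args(tap_ifname, netdev_id="net0", vhost=True):
    """Build QEMU arguments for a virtio-net NIC on an existing tap device.

    tap_ifname -- name of a tap interface already created on the host
    netdev_id  -- arbitrary id linking the -netdev and -device options
    vhost      -- ask QEMU to use the in-kernel vhost-net backend
    """
    backend = (f"tap,id={netdev_id},ifname={tap_ifname},"
               f"script=no,downscript=no,vhost={'on' if vhost else 'off'}")
    return [
        "-netdev", backend,
        "-device", f"virtio-net-pci,netdev={netdev_id}",
    ]
```

A caller could pass `vhost=vhost_net_available()` and append the result to the rest of the QEMU command line. With `vhost=on`, packet processing for the virtio-net device is done by the vhost-net driver in the host kernel, so it no longer goes through QEMU user space.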